Facebook is once again at the center of controversy, this time over a whistleblower’s accusation that it prioritizes profit over monitoring hate groups and hate speech. The lack of transparency over content moderation has often been flagged as one of the key issues with the social media giant, which also owns Instagram and WhatsApp. The Intercept has published a 100-page list of ‘Dangerous Individuals and Organizations’, which Facebook circulates among its employees for moderating content on its sites.

Allegations of ineffective moderation of hate speech on Facebook-owned platforms have often been countered by the company’s claim that it judiciously monitors all hate speech on the platform, along with pledges to do more to curtail violent rhetoric. The documents reveal two key patterns in Facebook’s moderation practices. Firstly, the vast majority of the listed individuals and organisations are from the Middle East and North Africa region, especially those with Arabic names, reflecting an embedded alignment with US foreign policy objectives rather than the concerns of its users; the language of the document clearly reflects a post-9/11 cultural bias. Secondly, there is a conspicuous absence of the large number of white supremacist, anti-immigrant, and neo-Nazi organisations that have been responsible for the numerical majority of terrorist attacks in Europe and North America.

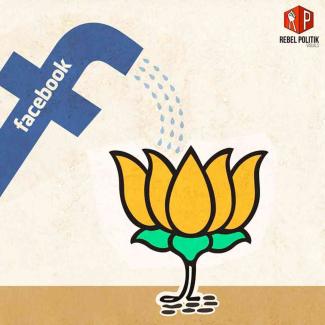

The revelations follow on the heels of the allegations made by Frances Haugen, a former Facebook data scientist and whistleblower, in her complaint to the SEC. The complaints include the company misleading investors and users regarding its safety practices; a disproportionate focus on the US, which receives 87% of moderation resources despite accounting for less than 10% of active users; and a failure to flag hate speech, with action taken on only 3-5% of it. An internal Facebook report titled ‘Adversarial Harmful Networks – India Case Study’ clearly demonstrates the extent of hate content and fear-mongering against Muslims on the platform. Another sampling survey in West Bengal demonstrated the extent of fake news being shared on the platform, amid the company’s limited Bengali- and Hindi-language moderation resources. The complaint also cites a third document titled ‘Lotus Mahal’, which shows that the company was aware of the BJP IT cell flooding the platform with duplicate and fake accounts in order to boost its user engagement.

While Facebook categorises India as a ‘Tier One’ country, reflecting a high risk of societal violence, its actions to prevent its own platform from being used for such violence have been limited. The company has chosen a deliberate strategy of turning a blind eye to hate speech by Sangh-linked organizations like Bajrang Dal, precisely out of fear that any crackdown could bring physical retribution against its India-based employees. It was also revealed last year that Ankhi Das, formerly the company’s top public-policy executive in India, had opposed any application of hate-speech rules to Hindu nationalist politicians and groups.

Engels used the term ‘social murder’ in The Condition of the Working Class in England to demonstrate how members of the working class were being systematically pushed to their deaths so that capitalist enterprise could be profitable. In the modern age of ‘Digital Capitalism’, the literal murder of working-class people and minorities is being encouraged so that social media companies can become and remain profitable.
